Artificial Intelligence - Product or Consumer?

Review

Sayak Dasgupta

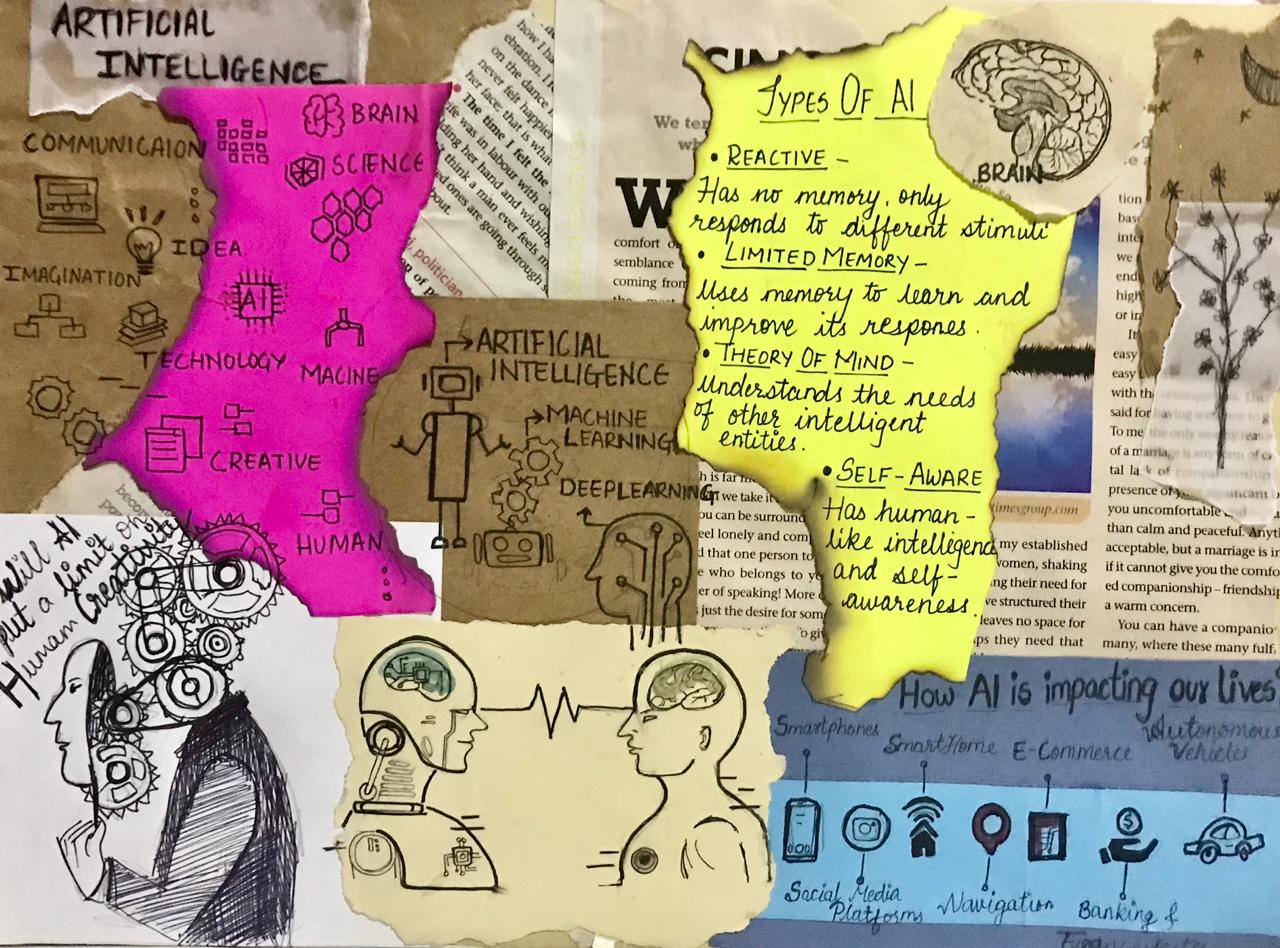

Credits: Ritika Khandelwal, The Decadents magazine


"If you are not paying for the product, you are the product!" – The Social Dilemma.


Alan Turing’s ‘Turing Test’ can be argued to be the foundation stone of the concept of Artificial Intelligence and Machine Learning. Turing proposed it as a way to find out whether machines can think. Fast forward to the modern era: cognitive scientists and AI experts are concerned with the extent to which this thinking process can be instilled in machines, and whether machines are capable of learning new things on their own. Both ancient Eastern and Western philosophers would argue that the ability to think and consciousness are features exclusive to human beings. Today, when we refer to AI, it is safe to say that we mean a mechanical tool that exhibits intelligence in the way human beings and animals do.

Artificial Intelligence is rife with contradictions. It is a powerful tool that is also surprisingly limited in its current capabilities. And while it has the potential to improve human existence, it also threatens to deepen social divides and put millions of people out of work. Its inner workings are highly technical, but the layperson should try to comprehend the non-technical part. This article aims to deal with the basic principles of how AI works and the concerns it raises.

In recent years there has been an unprecedented rise in investment in Artificial Intelligence, its applications and research. Amidst all of that, the ‘ethical employment of AI' has been a central point of various debates, and questions have been raised about the policy frameworks that govern it. With Covid-19 acting as a catalyst, society has become more and more dependent on technology, despite legitimate concerns about it and the social discomfort it causes. Some of AI's business applications have resulted in the loss of jobs, the reinforcement of biases, and infringements on data privacy.

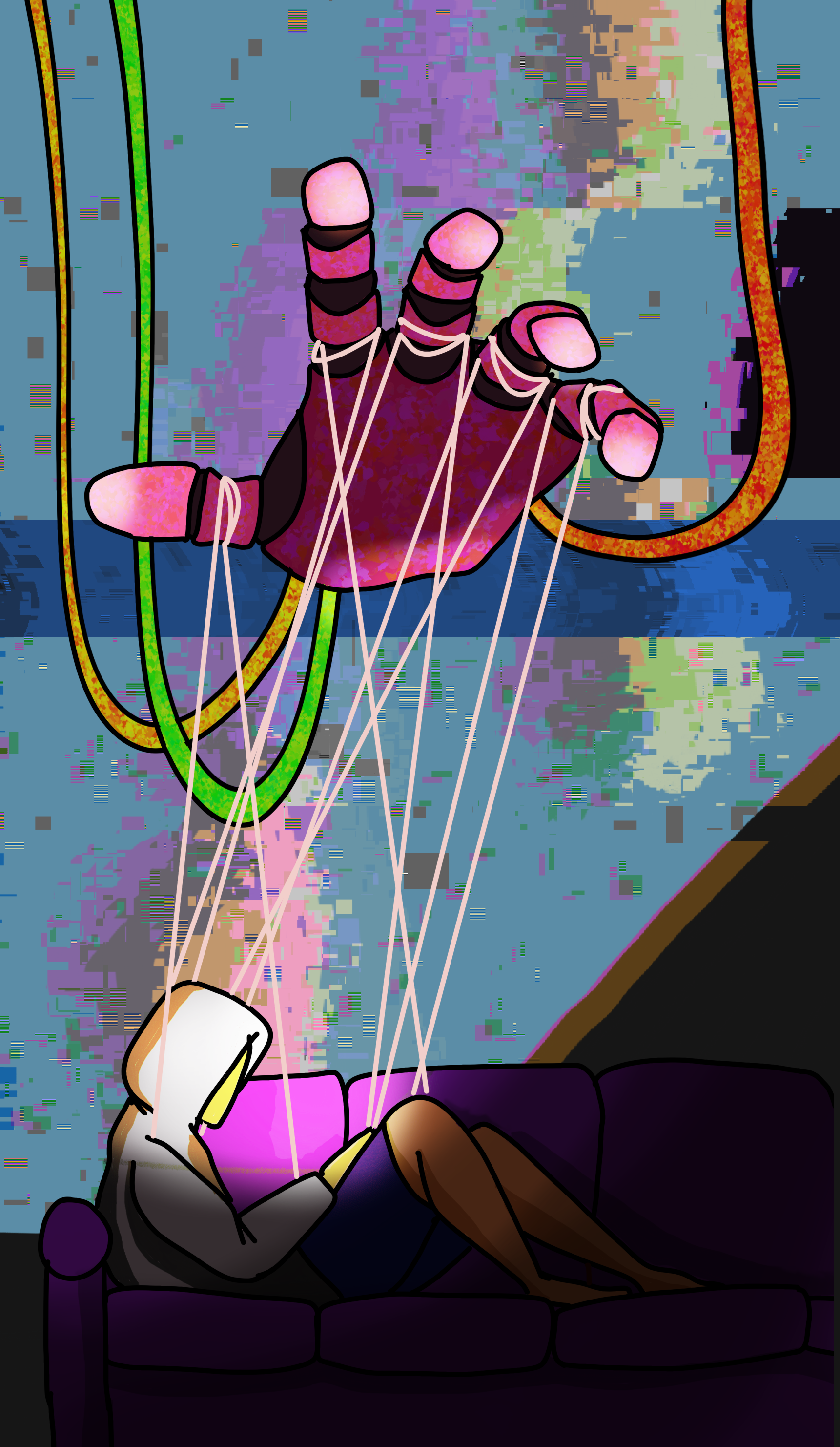

Credits: Arunima Vadlamannati


With the advent of self-driving cars, it is likely that AI-driven vehicles will soon replace cab drivers and other public and private transportation employees. In 2015, Google Photos came under scrutiny for labelling a Black software engineer and his friends as “gorillas”. This embarrassing technical glitch illustrates the difficulties in advancing image-recognition technology, which tech companies hope to deploy in self-driving cars, personal assistants, and other products. Next, we have the issue of infringement on data privacy. Companies like Google claim that their mission is “to organise the world's information and make it universally accessible and useful.” However, reading between the lines, we see a darker motive: monetising this global data.

Google's master plan can be summarised as a ‘substantial customer acquisition strategy'. Through this scheme, the company could attract a large consumer base by offering a strong incentive: free access to the World Wide Web. Along with this, the tech giant acquired platforms like YouTube, Android, and dozens of others, which provided it with a unique data empire to enhance, protect, and monetise in ever-expanding applications. The data that Google and similar companies gather about their users is then run through algorithms with the help of machine learning and Artificial Intelligence. By selling the data to advertisers, companies monetise our private and public data.

To gain first-hand experience of this, I googled ‘Graduate Study in the UK and US'. I visited around seven web pages of different educational institutions based in those regions and spent more time on the Language Requirements and Funding pages specifically. Since then, whenever I went online, my Facebook wall and YouTube were filled with advertisements for universities in my desired regions, IELTS training, student loans, and scholarships for studying abroad. From this, I could only infer that it was not just my browser history that was being tracked, but also which pages I visited and for how long, and probably more. Using the cumulative data collected about me, the Google algorithm suggested advertisements matched to my interests. Such incidents are a daily affair in current times. More and more people are aware of this function of the internet. However, not many raise a red flag about it.

On the negative side, there are well-documented cases of abuse resulting from covert data collection. For example, in 2020, Clearview AI, a US-based start-up that compiles billions of photos for facial recognition technology, said it had lost its entire client list to hackers. According to the company, the intruder "gained unauthorised access” to its customer list, which included police forces, law enforcement agencies and banks. The company came under scrutiny in January 2020 after a New York Times investigation revealed that its technology allowed law enforcement agencies to match photos of unknown faces to people's online images. The company also retains those photos in its database even after internet users delete them from the platforms or make their accounts private. Such employment of AI has immense potential to cause a stir in civil society. Eric Goldman, an expert in intellectual property and cyber law, speaking to the NY Times, said, "The weaponization possibilities of this are endless … Imagine a rogue law enforcement officer who wants to stalk potential romantic partners, or a foreign government using this to dig up secrets about people to blackmail them or throw them in jail.” (Hill, 2020)

The journalist investigating the case wrote, “While the company was dodging me [during the investigation], it was also monitoring me. At my request, a number of police officers had run my photo through the Clearview application. They soon received phone calls from company representatives asking if they were talking to the media – a sign that Clearview has the ability and, in this case, the appetite to monitor whom law enforcement is searching for.” (ibid.) This suggests that such companies may employ AI to raise red flags when their own interests are at stake. The company has not disclosed how its AI system works or other technical details. Still, it might be anticipated that it has put advanced AI and machine learning code in place to manage its pool of data, along with the user interface.

Companies that are involved in data collection and analysis need to address these issues sooner or later. Be it Facebook's algorithms, Google ads, or companies that deal with facial recognition technology, all of them have an added responsibility not to delegate the moral responsibility of humans to machines. Artificial Intelligence is not a free ticket that allows such companies to escape their ethical accountability. Yes, computation should help us make better decisions. Still, organisations and freelancers must own their moral responsibility for judgement. Algorithms should be employed within this framework, not as a means to abdicate and outsource the duties of the stakeholders. It is imperative to hold on ever tighter to human values and ethics as the Fourth Industrial Revolution unfolds.

Sayak Dasgupta is currently pursuing an undergraduate degree in Philosophy at Jadavpur University. His primary areas of interest are Ethics and its application to War, Nuclear Weapons, Artificial Intelligence and Justice.
