Insight Digest | Issue #6

In Insight Digest, we showcase the latest happenings in science research.

Thermal-breach memory: Harnessing metastability for sensing to artificial neurons

Aniket Bajaj, Satyaki Kundu, Shikha Sahu, D. K. Shukla, Bhavtosh Bansal. Appl. Phys. Lett. 127, 021902 (2025) (Department of Physical Sciences, IISER Kolkata) Keywords: Phase transitions, Strongly correlated electron systems, Nonvolatile memory

In everyday life, memories often surface in unexpected and seemingly random ways. Interestingly, various forms of memory have also been identified in the physical world, ranging from magnetic systems and shape memory alloys to emerging fields like neuromorphic computing. In these systems, "memory" refers to the dependence of a system's current state on its history, often manifesting as multivalued or path-dependent behaviour. A system that has not yet fully relaxed to equilibrium can retain information about its creation or prior states, while one that has reached equilibrium loses all traces of its past: the act of equilibration effectively erases its memory.

Recently, our group, led by Prof. B. Bansal, identified a novel form of memory in phase change materials, which we call thermal-breach memory. We first discovered this phenomenon, in collaboration with Prof. D. D. Sarma’s group at IISc Bangalore, in a well-known halide perovskite (MAPbI3), which undergoes a thermally induced phase change at low temperature (~150 K). Later, recognizing the broad potential of this memory effect, we collaborated with Prof. D. K. Shukla’s group at UGC-DAE CSR, Indore, to establish the concept for room-temperature applications using another phase change material, vanadium dioxide (VO2).

These materials exhibit a key characteristic during their phase transition, hysteresis: the phase fraction follows different paths depending on whether the external parameter (here, temperature) is increasing or decreasing (see figure). Memory effects require the system to be in a non-equilibrium state, which corresponds to the hysteretic (metastable) region. We found that when the system is held within this coexistence region at a constant temperature, the metastable phase becomes "frozen," showing no further progression toward the stable phase. If these isothermal conditions are perturbed, the breach can be tracked by monitoring the frozen phase, because its response to the perturbation depends on how it was prepared (while heating or while cooling, see figure). This history-dependent response enables the detection of thermal breaches as small as one degree.
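The history dependence described above can be illustrated with a deliberately simple toy model. This is a sketch under stated assumptions, not the model used in the paper: the sigmoidal branches, the midpoints `T_HEAT` and `T_COOL`, the width `WIDTH`, the hold temperature `HOLD`, and the clamping rule in `step` are all hypothetical choices made for illustration.

```python
import math

# Hypothetical parameters (illustrative only, not fitted to any material).
T_HEAT = 342.0   # midpoint of the heating branch, in K
T_COOL = 336.0   # midpoint of the cooling branch, in K
WIDTH = 1.5      # width of each branch, in K

def branch(temp, midpoint):
    """Sigmoidal high-temperature phase fraction along one branch."""
    return 1.0 / (1.0 + math.exp(-(temp - midpoint) / WIDTH))

def step(fraction, temp):
    """Keep the fraction frozen unless a branch of the loop pushes it."""
    lower = branch(temp, T_HEAT)  # heating branch bounds the loop from below
    upper = branch(temp, T_COOL)  # cooling branch bounds the loop from above
    return min(max(fraction, lower), upper)

def run(fraction, temps):
    """Apply a temperature protocol and return the final phase fraction."""
    for temp in temps:
        fraction = step(fraction, temp)
    return fraction

def ramp(start, stop, n=200):
    """Evenly spaced temperatures from start to stop, inclusive."""
    return [start + (stop - start) * i / n for i in range(n + 1)]

def breach(fraction, hold, delta):
    """Excursion hold -> hold + delta -> hold; returns the final fraction."""
    return run(fraction, ramp(hold, hold + delta) + ramp(hold + delta, hold))

HOLD = 339.0                            # hold temperature inside the loop
heated = run(0.0, ramp(320.0, HOLD))    # state prepared while heating
cooled = run(1.0, ramp(360.0, HOLD))    # state prepared while cooling

for label, state in (("heated", heated), ("cooled", cooled)):
    up = breach(state, HOLD, +1.0) - state
    down = breach(state, HOLD, -1.0) - state
    print(f"{label}: change after +1 K = {up:+.3f}, after -1 K = {down:+.3f}")
```

In this toy picture, a state prepared on heating changes only after an upward excursion, while a state prepared on cooling changes only after a downward one, so the same one-degree breach leaves a different record depending on the preparation history.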

Thus, athermal states in phase change materials enable thermal-breach memory: small thermal breaches are detected through the distinct response of the phase fraction, offering stable, sensitive operation for sensor and neuromorphic applications.

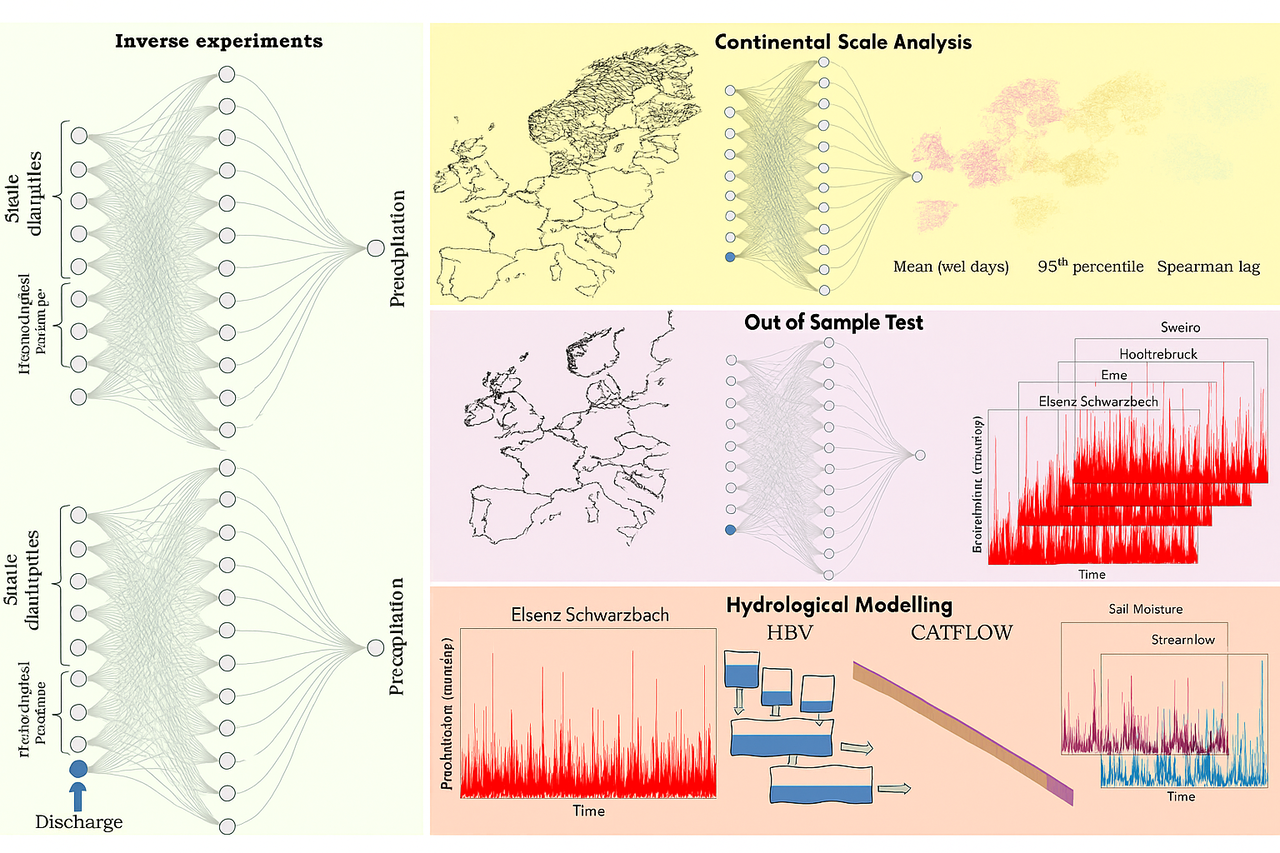

Listening to Rivers: How Stream flow Can Help Us Understand Rainfall Better

Dean, J.F., Coxon, G., Zheng, Y. et al. Old carbon routed from land to the atmosphere by global river systems. Nature 642, 105–111 (2025) (Department of Physical Sciences, IISER Kolkata (20MS)) Keywords: River CO₂ emissions, Radiocarbon dating, Global carbon cycle

Understanding rainfall is key for managing water, predicting floods, and studying climate, but measuring it directly is tricky. Rain gauges and satellites often miss details or have errors. This paper explores a creative idea: can we use the water flowing out of rivers (stream flow) to better estimate how much rain actually fell in a region? In other words, can we trace rainfall backward from the river’s response?

This sounds simple but isn’t. Stream flow depends not only on rain, but also on evaporation, soil moisture, and groundwater—all of which mix together in complicated ways. Many different rain patterns can produce similar river flows, so working backward to find the exact rainfall is impossible. Instead, the researchers aim to estimate the average rainfall over a catchment, which is more realistic and useful.

To tackle this, they use a type of artificial intelligence called an LSTM (Long Short-Term Memory) neural network. It’s good at handling time-dependent data, like how rivers respond to rain with delays. They train two models: one predicts rainfall using weather data such as temperature and humidity, while the other adds river discharge information. Comparing the two shows whether including river data actually helps.

The results are promising. The model that includes streamflow performs about 20% better in estimating rainfall, especially in areas with good data. However, it slightly underestimates rainfall overall, meaning some adjustments are still needed. When these improved rainfall estimates are used in hydrological models, they better match real-world observations of soil moisture and floods—showing that this method captures important water-cycle behavior.
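Statements such as "about 20% better" and "slightly underestimates" can be made concrete with an error metric and a bias metric. The sketch below assumes root-mean-square error and mean bias; the summary does not say which metrics the authors used, and the function names and the small rainfall series are hypothetical toy values, not data from the study.

```python
import math

def rmse(predicted, observed):
    """Root-mean-square error between two equal-length series."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))

def mean_bias(predicted, observed):
    """Average of (predicted - observed); negative means underestimation."""
    return sum(p - o for p, o in zip(predicted, observed)) / len(observed)

def relative_improvement(rmse_baseline, rmse_candidate):
    """Fractional RMSE reduction of the candidate relative to the baseline."""
    return 1.0 - rmse_candidate / rmse_baseline

# Hypothetical catchment-average rainfall (mm/day), for illustration only.
observed = [10.0, 0.0, 5.0, 20.0, 8.0]
weather_only = [7.0, 2.0, 6.0, 15.0, 8.0]     # model without streamflow
with_streamflow = [8.0, 1.0, 5.0, 17.0, 7.0]  # model with streamflow added

gain = relative_improvement(rmse(weather_only, observed),
                            rmse(with_streamflow, observed))
print(f"RMSE reduction: {gain:.0%}")
print(f"Mean bias with streamflow: {mean_bias(with_streamflow, observed):+.1f} mm/day")
```

A positive `relative_improvement` means the streamflow-informed model has the lower error, and a negative `mean_bias` corresponds to the overall underestimation mentioned above.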

The authors note that the approach relies on good-quality data and could benefit from newer AI techniques in the future. Still, their work demonstrates a powerful concept: using river flow (the effect) to learn about rainfall (the cause). It’s a fresh way of combining machine learning with hydrology—looking at the water cycle in reverse—to improve rainfall estimates where observations are limited.

In short, the study shows how “listening” to rivers can help us better understand the rain that feeds them, offering a smart and practical step forward for water and climate science.

What do the fundamental constants of physics tell us about life?

Mehta, Pankaj, and Jané Kondev. What do the fundamental constants of physics tell us about life? arXiv preprint arXiv:2509.09892 (2025). (Department of Physical Sciences, IISER Kolkata) Keywords: Biological Physics, Earth and Planetary Astrophysics, Statistical Mechanics

In this extraordinary work by Pankaj Mehta and Jané Kondev, physics reaches toward the living world with the same audacity Weisskopf once used to estimate the height of mountains. Half a century ago, Weisskopf showed that just six physical constants (ℏ, c, e, mₑ, mₚ, Gₙ) suffice to estimate densities, mountain heights, and the stability of matter. But life was missing from that program. This paper attempts the most radical extension: to put “life” itself inside the Weisskopf reasoning game, and to ask how much of biology can be read directly from quantum constants. How much inevitability is buried inside the Rydberg energy? How much universality do α and mₑ/mₚ impose on metabolism and replication?

Life on Earth is, fundamentally, electrons falling downhill toward lower energy states. Szent-Györgyi called life a high-energy electron searching for a place to rest; this paper takes that statement literally and quantitatively. The authors argue that three essential biological numbers (growth yield, minimum possible doubling time, and the minimum maintenance power of dormancy) can all be derived from fundamental constants plus a few geometric and structural fudge factors. The Rydberg energy (~13.6 eV) and Bohr radius (~0.53 Å) define the quantum base units of chemistry. Thermal fluctuations (kBT ~ 25 meV) are more than two orders of magnitude smaller. This large asymmetry explains why chemistry is stable, why catalysts exist, and why biological timescales must fall between these two worlds (quantum and thermal).
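The gap between the quantum and thermal energy scales is easy to check numerically. The short sketch below assumes a temperature of 300 K, which the text does not specify; the constant names are our own, and the values are the standard ones for the Boltzmann constant and the Rydberg energy.

```python
K_B_EV_PER_K = 8.617333262e-5   # Boltzmann constant, in eV/K
RYDBERG_EV = 13.605693          # Rydberg energy, in eV
TEMPERATURE_K = 300.0           # assumed ambient temperature, in K

# Thermal energy scale kBT and its ratio to the Rydberg energy.
thermal_ev = K_B_EV_PER_K * TEMPERATURE_K
ratio = RYDBERG_EV / thermal_ev

print(f"kBT at {TEMPERATURE_K:.0f} K = {thermal_ev * 1e3:.1f} meV")
print(f"Rydberg energy / kBT = {ratio:.0f}")
```

At this assumed temperature, the Rydberg energy exceeds kBT by a factor of roughly five hundred, which is the asymmetry the paragraph above refers to.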

From this, they estimate the yield Y: mass (g) produced per Joule consumed. Using only α, Ry, molecular atomic numbers and bond fudge factors, they get ~10⁻⁵–10⁻⁴ g/J — astonishingly matching microbes on Earth. This, they argue, may be universal, even for alien life, because it is not exponentially sensitive to activation energy — unlike kinetics.

Minimum doubling time emerges from the interplay of a quantum-set minimum viscosity (Berg viscosity) and diffusion-limited kinetics. With Arrhenius activation barriers of 0.4–1.1 eV, one gets doubling times ranging from seconds to geological timescales, spanning Vibrio natriegens (~10 minutes) to deep-ocean microbes (~years). Finally, the dormant power arises from thermally opened membrane pores that leak charge, which must then be pumped back; this gives ~10⁻¹³–10⁻¹⁵ J/s per cell, the same order of magnitude that biological measurements report.
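The wide range of doubling times follows from the exponential sensitivity of Arrhenius kinetics to the barrier height. The sketch below computes only the Arrhenius factor exp(Ea / kBT) for the two barrier values quoted above; the temperature of 300 K and the helper name `arrhenius_slowdown` are assumptions, and the prefactor that sets absolute times in the paper is deliberately left out.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant, in eV/K

def arrhenius_slowdown(barrier_ev, temperature_k=300.0):
    """Factor exp(Ea / kBT) by which a barrier slows a thermally activated step."""
    return math.exp(barrier_ev / (K_B_EV_PER_K * temperature_k))

low_barrier = arrhenius_slowdown(0.4)    # lower end of the quoted range
high_barrier = arrhenius_slowdown(1.1)   # upper end of the quoted range
spread = high_barrier / low_barrier      # ratio of the two timescales

print(f"Timescales differ by about 10^{math.log10(spread):.1f}")
```

Under these assumptions, raising the barrier from 0.4 eV to 1.1 eV stretches the timescale by close to twelve orders of magnitude, enough to separate minutes from geological times.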

The result is profound: evolution explores details, but the gross “possibility space” of life is already sharply bounded by physics itself long before chemistry, DNA, or selection. The production of biomass, the speed of replication, the lowest energy to stay alive — are already written in the constants of nature. Growth yield is the least free, most universal property of life. This is not biology derived from empirical catalogs — this is life emerging almost poetically from ℏ, α, mₑ, mₚ. Physics gives life a skeleton before evolution gives life a story.

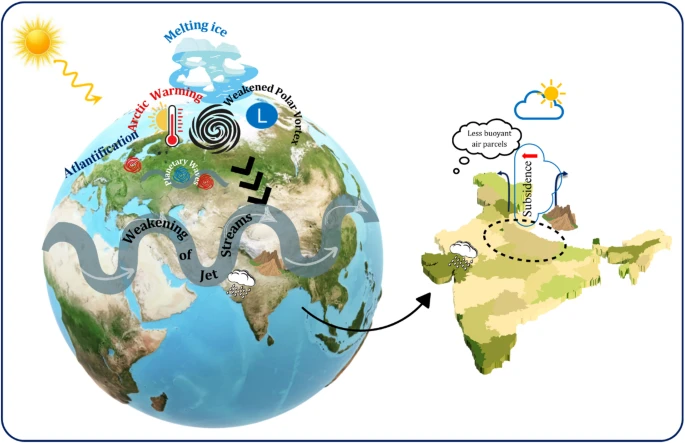

From the Arctic to India: Understanding How Barents–Kara Sea Ice Influences the Monsoon

Sardana, D., Agarwal, A. Impact of spring sea ice variability in the Barents–Kara region on the Indian Summer Monsoon Rainfall. Sci Rep 15, 37790 (2025) (IIT Roorkee) Keywords: Arctic Sea Ice, Barents–Kara Region, Indian Summer Monsoon, Teleconnections

The Arctic is warming faster than any other region on Earth, and one of the clearest signs of this rapid change is the steady decline of sea ice. Among the various Arctic seas, the Barents–Kara (B–K) Sea, located north of Russia, has been identified as a particularly sensitive region where warming and ice loss occur rapidly. This study explores how variations in spring sea ice in the B–K region influence the behaviour of the Indian Summer Monsoon Rainfall (ISMR), which is vital for the climate, agriculture, and economy of India.

To investigate this link, the researchers examined climate data spanning more than six decades, from 1959 to 2021. They created a springtime sea ice index for the B–K region and identified years with unusually low and unusually high sea ice. They then analysed how the monsoon behaved during the following summer in each of these contrasting years.
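The index-and-composite procedure described above can be sketched in a few lines. The version below assumes a standardized index with a threshold of one standard deviation for "unusually" low or high years; the summary does not state the authors' exact threshold, and the function names and the eight-year series are hypothetical toy values, not data from the study.

```python
from statistics import mean, pstdev

def standardize(series):
    """Convert {year: value} into {year: z-score} using the series mean and spread."""
    mu, sigma = mean(series.values()), pstdev(series.values())
    return {year: (value - mu) / sigma for year, value in series.items()}

def split_years(index, threshold=1.0):
    """Return (low_years, high_years) whose z-score exceeds the threshold."""
    low = sorted(year for year, z in index.items() if z <= -threshold)
    high = sorted(year for year, z in index.items() if z >= threshold)
    return low, high

def composite_difference(values, high_years, low_years):
    """Mean of a variable over high-index years minus its mean over low-index years."""
    return mean(values[y] for y in high_years) - mean(values[y] for y in low_years)

# Hypothetical spring sea ice (arbitrary units) and summer rainfall (mm).
sea_ice = {2001: 1.0, 2002: 3.0, 2003: 2.0, 2004: 2.0,
           2005: 0.5, 2006: 3.5, 2007: 2.0, 2008: 2.0}
rainfall = {2001: 820.0, 2002: 900.0, 2003: 870.0, 2004: 860.0,
            2005: 800.0, 2006: 930.0, 2007: 880.0, 2008: 850.0}

low_years, high_years = split_years(standardize(sea_ice))
difference = composite_difference(rainfall, high_years, low_years)
print("Low-ice years:", low_years, "High-ice years:", high_years)
print(f"High-minus-low rainfall composite: {difference:.1f} mm")
```

Comparing composites of the following summer in this way isolates the monsoon response associated with the two extremes of the index, which is the contrast the next paragraphs describe.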

The study found a clear and consistent relationship between spring Arctic sea ice conditions and the strength of the Indian monsoon. During years with low sea ice in the B–K region, the exposed ocean water absorbs more heat, warming the lower atmosphere and reducing surface pressure. This thermal and pressure imbalance helps generate disturbances that travel across continents as large-scale atmospheric waves. These waves modify the position and strength of the subtropical westerly jet stream during the Indian monsoon season. In low-ice years, the jet stream shifts slightly southward and encourages sinking air over northern India, especially across the Indo-Gangetic Plain. This downward motion suppresses the vertical movement of moist air, limits cloud development, and ultimately weakens monsoon rainfall in one of India’s most important agricultural zones.

In contrast, years with higher-than-usual spring sea ice show an opposite response. A colder Arctic surface supports higher pressure and different atmospheric wave patterns, which help strengthen upper-level divergence and promote rising motion over northern India. These conditions favour stronger monsoon circulation, enhanced moisture transport and increased rainfall, particularly in the Indo-Gangetic Plain and parts of Northeast India.

The findings highlight how tightly connected the world’s climate systems are. Though India’s monsoon is strongly influenced by tropical factors such as the Indian Ocean, ENSO, and land–sea thermal contrasts, this research demonstrates that conditions in the faraway Arctic also exert an important influence. This has significant implications for water resources, agriculture, and long-term planning. The study suggests that including Arctic indicators in monsoon prediction models could help improve seasonal forecasts and deepen our understanding of global climate linkages.